OR:

Your applications are hostile, are you protecting yourself from them?

Or maybe:

The assumption that your applications are insecure and that you need to protect yourself from them should be a factor in building your security model.

The Intent:

The intent of this post is not to break new ground, but rather to describe a concept in a simple framework that can be committed to memory and become part of the 'assumed knowledge' of an organization.

The Rules:

#1 - In general, the closer a system or component is to the outside world or the more exposed the system is to the outside world (the user, the Internet or the parking lot) the less trusted it is assumed to be.

#2 - The closer a system or component is to the data (database), the higher the level of trust that must be assumed.

#3 - Any traffic or data that flows from low trust to high trust is presumed hostile. Any data flowing in that direction is assumed tainted. Any systems that are of higher trust assume that the lower trust systems are hostile. (a red arrow).

#4 - Traffic from high trust to low trust is presumed trusted. Any traffic flowing in that direction is presumed safe or trusted. (a green arrow). This one is arguable.

#5 - In between components that are of different trust levels are trust boundaries. Trust boundaries presumably are enforced by some form of access control, firewall or similar device or process. The boundaries are also assumed to be 'default deny', so that the only data or traffic that crosses the boundary is defined by and required for the application. All other data or traffic is blocked. The boundaries are also assumed to have audit logs of some kind that record every case where the boundary was crossed. (a red line).
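
To make rules #3 and #5 concrete, here is a minimal sketch of a default-deny boundary that also keeps an audit trail. The trust levels, the `Boundary` class and the port numbers are all made up for illustration; a real boundary would be a firewall, an ACL or something similar, not a Python object.

```python
from dataclasses import dataclass, field

# Hypothetical trust levels for illustration: a higher number is closer to the data.
TRUST = {"internet": 0, "web": 1, "app": 2, "db": 3}

@dataclass
class Boundary:
    """A default-deny trust boundary that records every crossing attempt."""
    allowed: set                                  # explicit (src, dst, port) flows
    audit_log: list = field(default_factory=list)

    def check(self, src, dst, port):
        permitted = (src, dst, port) in self.allowed   # Rule 5: default deny
        tainted = TRUST[src] < TRUST[dst]              # Rule 3: low to high is hostile
        self.audit_log.append((src, dst, port, permitted))
        return permitted, tainted

boundary = Boundary(allowed={("web", "app", 8080)})
print(boundary.check("web", "app", 8080))   # permitted, but still treated as tainted
print(boundary.check("web", "db", 5432))    # not on the allow list, so denied
```

Note that a permitted flow is still tainted. Crossing the boundary legitimately does not make the data trustworthy.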

An example:

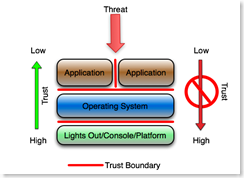

Start with a simple example. Pretend that the threat is at the top of the drawing, the applications are directly exposed to the threat, and the applications are hosted on an operating system that is hosted on a platform of some kind (hardware).

The application designer and administrators must assume that the outside world (the threat, sometimes called the customer or the Internet) is hostile and therefore they design, code, configure and monitor the application accordingly. This concept should be pretty obvious, but based on the large number of XSS and SQL injection attacks, it seems to be widely unknown or ignored.
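
As a rough illustration of what 'code accordingly' can look like, the sketch below treats a user-supplied name as hostile in two places: it is bound as a query parameter instead of being pasted into the SQL text, and it is escaped before being written into HTML. The `users` table and both function names are assumptions made up for this example, and SQLite simply stands in for whatever database is really behind the application.

```python
import html
import sqlite3

def find_user(conn, name):
    # The ? placeholder keeps the input as data; it never becomes SQL text.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (name,)
    ).fetchall()

def render_greeting(name):
    # Escape on output so markup supplied by the user is displayed, not executed.
    return "<p>Hello, " + html.escape(name) + "</p>"

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO users (name) VALUES ('alice')")

print(find_user(conn, "alice"))
print(find_user(conn, "' OR '1'='1"))     # classic injection string matches nothing
print(render_greeting("<script>alert(1)</script>"))
```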

Applications co-located on the same operating system must implicitly distrust each other (the vertical red line). Presumably they run as different userids, they do not have write privileges in the same file space, and they assume that any data that passes between them is somehow tainted and scrub it accordingly.

The operating system administrator assumes that the applications hosted on the operating system are hostile, and designs, configures, monitors and audits the operating system accordingly. File system and process rights are configured with minimal (least bit) privileges, the userids used by the application are minimally privileged, and process accounting or similar logging is used to monitor attempts to break out of the garden. The operating system also enforces application isolation in cases where the operating system hosts more than one application. Chroot jails or Solaris sparse zones are examples of application isolation.
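
One small piece of that auditing can be sketched as a check for files that are writable by group or world. This is only an illustration assuming a POSIX-style permission model; the function names are made up, and a real audit would also look at ownership, setuid bits, ACLs and so on.

```python
import os
import stat

def mode_is_too_open(mode):
    """True if the permission bits allow group or world write access."""
    return bool(mode & (stat.S_IWGRP | stat.S_IWOTH))

def find_too_open(root):
    """Yield paths under root whose permissions allow group or world writes."""
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            if os.path.islink(path):
                continue  # a symlink's own mode bits are not meaningful here
            if mode_is_too_open(os.lstat(path).st_mode):
                yield path

print(mode_is_too_open(0o777))   # world writable
print(mode_is_too_open(0o750))   # owner write only
```

Running `find_too_open` over an application's directory tree gives the administrator a list of places where a hostile application (or its neighbor) could write where it should not.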

This of course is the basic operating system hardening concept or process that has evolved over the past decade and a half.

The platform (the hardware, the keyboard, the physical ports, the Lights Out console, serial console, system controller, etc.) assumes that the operating system that booted from the platform is hostile. The operating system, when assumed to be hostile, has to be prevented from accessing its own console, its own lights out interfaces, or the interface that allows firmware, BIOS or boot parameters to be modified. This presumes that access to the console automatically invalidates any security on the operating system or any layer above the OS. This also encompasses physical security.

The underlying assumption is that if the platform is compromised, everything above the platform is automatically presumed to be compromised, and if the operating system is compromised, the applications are automatically presumed to be compromised.
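
That propagation rule is simple enough to write down. The sketch below assumes the stack is just an ordered list from the bottom layer to the top; the layer names and the function are illustrative only.

```python
# Layers listed from the bottom (platform) to the top (application).
STACK = ["platform", "operating system", "application"]

def presumed_compromised(layer, stack=STACK):
    """Return the compromised layer plus every layer hosted above it."""
    return stack[stack.index(layer):]

print(presumed_compromised("operating system"))
print(presumed_compromised("platform"))
```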

With Virtualization:

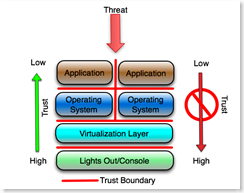

Adding virtualization doesn't change any of the concepts. It simply adds a layer that must be configured to protect itself against the presumed hostile virtual machines that it hosts (and of course the hostile applications on those virtual hosts).

The most protected interface into the system, the virtual host console or management interface, must protect itself from the process that hosts the virtual machines (the virtualization layer) and everything above it, including the hosted (guest) operating systems and the applications hosted on those operating systems. The operating systems hosted by the virtualization layer must protect themselves from each other (or the virtualization layer must provide that protection), and the applications hosted by a guest operating system must protect themselves from each other.

This has implications for how VMs get secured and managed. The operating system that is hosted by the virtualization layer should not, under any circumstances, have access to its own virtualization layer, or any management or other interface on that layer. Of course there are lots of articles, papers and blogs on how to make that happen, or on whether that can happen at all.

As in the first example, the platform must assume that the virtualization layer is hostile and must be configured to protect itself from that layer and all layers above it, and of course if the platform is compromised, everything above the platform is automatically presumed to be compromised.

For the Application Folks:

The third example conceptualizes the application layer only, specifically for those who work exclusively at the application layer. The red lines again are trust boundaries, and presumably are enforced by some form of access control, firewall or similar device or process. The concept is essentially the same as the prior examples.

The web server administrator assumes that the Internet is hostile and designs, configures and monitors the web server accordingly. That's pretty much a given today.

The application server administrator assumes that the web server is hostile and designs, configures and monitors the app server accordingly. The application server administrator and the administrator of the application running on the server implicitly distrust the web server. The presumption is that any traffic from the web server has been tainted.

The DBA assumes that the application running on the app servers is hostile and designs, configures, monitors and audits the database accordingly. This means that among other things, the accounts provisioned in the database for the application are minimally privileged (least bit, not database owner or DBA) and carefully monitored by the DBA, and that 'features' like the ability to execute operating system shells and access critical database information are severely restricted. This is fundamental to deterring SQL injection and similar application layer attacks.
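
On a real database server this is done with minimally privileged accounts and grants. As a stand-in that runs anywhere, the sketch below uses SQLite, which has no user accounts, and uses its authorizer callback to play the role of a read-only grant: the connection handed to the application can run SELECT statements and nothing else. The `accounts` table and the function name are assumptions made for the example.

```python
import sqlite3

def read_only_authorizer(action, arg1, arg2, db_name, source):
    # Allow only what a plain SELECT needs; deny every other action.
    if action in (sqlite3.SQLITE_SELECT, sqlite3.SQLITE_READ):
        return sqlite3.SQLITE_OK
    return sqlite3.SQLITE_DENY

# The "DBA" sets up the data with full rights...
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (owner TEXT)")
conn.execute("INSERT INTO accounts VALUES ('alice')")
conn.commit()

# ...then restricts the connection before the application gets to use it.
conn.set_authorizer(read_only_authorizer)

print(conn.execute("SELECT owner FROM accounts").fetchall())
try:
    conn.execute("DELETE FROM accounts")
except sqlite3.Error as exc:
    print("denied:", exc)
```

The point is the same as with real grants: even if an injection attack gets through the application, the damage is limited to what the application's database identity was allowed to do in the first place.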

The database server administrator assumes that the database instance is hostile, and designs, configures and monitors the server operating system accordingly. For example, the database userids do not have significant privileges on the server operating system, the file systems are configured with minimal privileges (least bit, not '777' or 'Full Control'), and the ability to shell out of the database to the operating system is restricted. The server administrator assumes that none of the application, the application servers or the database is trusted, and protects the server accordingly. (A simple example - the database server administrator does not permit interactive logins, remote shells or RPC from the assumed hostile application or web servers.)

Conclusion:

The concept is really simple. Keep it in mind when you are at the whiteboard.

I can't be the only blogger in the universe who isn't mentioning the iPhone this weekend, so here goes. Apparently from what I read, accessing the platform (the hardware/firmware/console) pretty much invalidates all of the iPhone security at the operating system and application layer. That lines up pretty well with the concepts in this article.

Interesting, Mike. These are good rules and examples to carry with me next time I discuss or explain trust boundaries.

I remain troubled by how problematic Rule #4 can be. As an app developer, I don't necessarily trust the database. Or the application server, for that matter. It's comforting (and common) to assume that the database is fully trusted, but there's no telling what else has been mucking with what's in there. It's an external component to the application -- or at least usually is, and is in your diagram. And that's where it gets messy, where multiple components are joined in a composite application and there's no clear line to draw a trust boundary, so the unidirectional line of trust isn't /quite/ enough. But it's a fine starting point.

I'd agree that #4 is problematic, but from what I can see, in most cases it is unavoidable. If the app server is compromised, it'd be pretty hard to consider the application still trustworthy, unless there was some mechanism for the application to verify its own integrity without using OS tools or libraries.

In the case of the application to database relationship though, presumably an application could check returned data, and if it finds interesting things like unexpected script tags, it could take reasonable action.

There certainly is room for refinement.

Thanks for the comment.

Sorry, I fell victim to a terminology confusion that tripped me up several times while reading this post. When I read "application server," I immediately jump to something like JBoss or GlassFish, not the physical box + OS.

I am intrigued by the notion of an application verifying its own integrity. I wonder what it would even mean for software not to trust itself. I have an inkling that someone has worked on this, buried in the annals of computer science research.

Hmmm ... couple things:

Where to place (and maybe even how to name) a utility/administrative access point that enables admins to move across the os/db/application boundary(ies)? Obviously, such a system assumes significant trust, but poses a larger challenge to hardening/monitoring/logging.

Then (and this may be next steps), how to whiteboard or otherwise spec responsibilities for testing these hostility assumptions. Related, I'm sure, to issues with testing in patching cycles, or more comically: http://xkcd.com/327/ , but given all these hostility assumptions, there may be some reasons to re-visit the human factor calculus.