We recently hit a major ZFS bug, causing the worst system outage of my 20 year IT career. The root cause:

Synchronous writes on ZFS file systems prior to Solaris 10 Update 8 are not properly committed to stable media prior to returning from fsync() call, as required by POSIX and expected by Oracle archive log writing processes.

On pre Update 8 MU8 + 142900-09[1], we believe that a programs utilizing fsync() or O_DSYNC writes to disk are displaying buffered-write-like behavior rather than un-buffered synchronous writes behavior. Additionally, when there is a disk/storage interruption on the zpool device and a subsequent system crash, we see a "rollback" of fsync() and O_DSYNC files. This should never occur, as write with fsync() or O_DSYNC are supposed to be on stable media when the kernel call returns.

If there is a storage failure followed by a server crash[2], the file system is recovered to an inconsistent state. Either blocks of data that were supposedly synchronously written to disk are not, or the ZFS file system recovery process truncates or otherwise corrupts the blocks that were supposedly synchronously written. The affected files include Oracle archive logs.

We experienced the problem on an ERP database server when an OS crash caused the loss of an Oracle archive log, which in turn caused an unrecoverable Streams replication failure. We replicated problem in a test lab using a v240 with the same FLAR, HBA’s, device drivers and a scrubbed copy of the Oracle database. After hundreds of test and crashes over a period of weeks, were able to re-create the problem with a 50 line ‘C’ program that perform synchronous writes in a manner similar to the synchronous writes that Oracle uses to ensure that archive logs are always consistent, as verified by dtrace.

The corruption/data loss is seen under the following circumstances:

- Run a program that synchronously writes to a file

OR

Run a program that asynchronously write to a file with calls to fsync().

- Followed by any of:[2]

- SAN LUN un-present

- SAN zoning error

- local spindle pull

- Then followed by:

- system break or power outage or crash recovery

Post recovery, in about half or our test cases, blocks that were supposedly written by fsync are not on disk after reboot.

As far as I can tell, Sun has not issued any sort of alert on the data loss bugs. There are public references to this issue, but most of them are obscure and don’t clearly indicate the potential problem:

- “MySQL cluster redo log gets truncated after a uadmin 1 0 if stored on zfs” (Sunsolve login required)

- “zfs has problems after a panic”

- “ZFS dataset sometimes ends up with stale data after a panic :

- “kernel/zfs zfs has problems after a panic - part II” Which contains a description: "But sometimes after a panic you see that one of the datafile is completely broken, usually on a 128k boundary, sometimes it is stale data, sometimes it is complete rubbish (deadbeef/badcafe in it, their system has kmem_flags=0x1f), it seems if you have more load you get random rubbish."

And:

- “fsync on zfs is broken if writes are greater than 32kb on a hard crash and no log attached”

From the Sun kernel engineer that worked our case:

Date 08-MAR-2010

Task Notes : […] Since S10U8 encompasses 10+ PSARC features and 300+ CR fixes for ZFS, and the fixes might be inter-related, it's hard to pinpoint exactly which ones resolve customer's problem.

For what it’s worth, Sun support provided no useful assistance on this case. We dtrace’d Oracle log writes, replicated the problem using an Oracle database, and then – to prevent Sun from blaming Oracle or our storage vendor - replicated the data loss with a trivial ‘C’ program on local spindles.

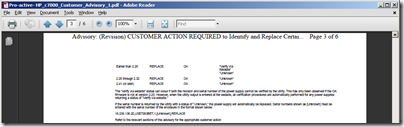

Once again, if you are on Solaris 10 pre Update 8 Update 8 + 142900-09[1] and you have an application (such as a database) that expects synchronous writes to still be on disk after a crash, you really need to run a kernel from Update 8 or newer (Oct 2009) Update 8 + 14900-09 dated 2010-04-22 or newer[1] .

[1] 2010-04-25: Based on on new information and a reading of 142900-09 released Apr/20/2010, MU8 alone doesn’t fully resolve the known critical data loss bugs in ZFS.

The read is that there are two distinct bugs, one fsync() related, the other sync() related. Update 8 may fix “6791160 zfs has problems after a panic”, but

Bug ID 6880764 “fsync on zfs is broken if writes are greater than 32kb on a hard crash and no log attached”

is not resolved until 142900-09 on 2010-04-22.

Another bug that is a consideration for an out of order patch cycle and rapid move to 142900-09:

Bug ID 6867095: “User applications that are using Shared Memory extensively or large pages extensively may see data corruption or an unexpected failure or receive a SIGBUS signal and terminate.”

This sounds like an Oracle killer.

[2]Or apparently a server crash alone.