Why is outsourcing to ‘the cloud’ any different than what we’ve been doing for years?

The answer:

It isn’t.

We’ve been outsourcing critical infrastructure to cloud providers for decades. This isn’t a new paradigm, and it’s not a huge change in the way we deploy technology. It’s pretty much the same thing we’ve always been doing. It’s just moved up the technology stack.

We’ve been outsourcing layer 1 forever (WAN circuits), layer 2 for a couple of decades (frame relay, ATM, MPLS), and sometimes even layer 3 (IP routing, VPNs) to cloud providers. Now we have something new: outsourcing layers 4 through 7 to a cloud provider.

So we are scratching our heads trying to figure out what this ‘new’ cloud should look like, how to fit our apps into a cloud and what the cloud means[1] for security, availability and performance. Heck, we’re not even sure how to patch the cloud[2], or even who is responsible for patching a cloud.

I’ll argue that outsourcing CPU, database or storage to a server/application cloud isn’t fundamentally different than outsourcing transport to an MPLS cloud, as practically everyone with a large footprint is already doing. In both cases you’ve entrusted your bits to someone else, you’ve shared physical and logical resources with others, and you’ve disassociated physical devices (circuits or servers) from logical devices (virtual circuits, virtual servers). In exchange for what is hopefully better, faster, cheaper service, you give up visibility, manageability and control to a provider.

What would happen if we took a look at the parts of our infrastructure that are already outsourced to a cloud provider and saw whether we could apply the lessons learned from layers 1 through 3 to the rest of the stack?

Lesson 1: The provider matters. We use both expensive Tier 1 providers and cheap local transport providers for a reason. We have expectations of our providers, and we have SLAs that cover, among other things, availability, management, reporting, monitoring, incident handling and contract dispute resolution. When a provider fails to live up to its SLAs, we find another provider (see Lesson 3). If we’ve picked the right provider, we don’t worry about their patch process. They have an obligation to maintain a secure, available, reliable service, and when they don’t, we have means to redress the issue.

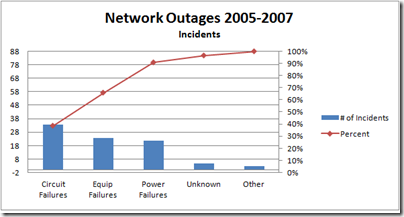

Lesson 2: Design for failure. We provision multiple Tier 1 ISPs to multiple network cores for a reason. The core is spread out over 4 sites in two cities for a reason. We have multiple providers and multiple 10 Gig paths between the cores for a reason. We use two local providers to each hub for a reason. The reason is (guess what) sh!t happens! But we know that if we do it correctly, we can lose a 10 Gig connection to a Tier 1 and nobody will know, because we designed for failure. And when we decide to cut costs and use a low-cost provider or skimp on redundancy, we accept the increased risk of failure, and presumably have a plan for dealing with it.
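To put rough numbers on why the redundancy is worth paying for, here is a back-of-the-envelope sketch. The 99.9% availability figure and the function names are illustrative assumptions, not anything from a real SLA, and the math assumes provider failures are independent, which shared conduits and shared carrier hotels routinely violate.

```python
HOURS_PER_YEAR = 8760


def combined_availability(availabilities):
    """Chance that at least one provider is up.

    Assumes failures are independent (an idealization; two circuits
    in the same trench fail together).
    """
    p_all_down = 1.0
    for a in availabilities:
        p_all_down *= (1.0 - a)
    return 1.0 - p_all_down


def downtime_hours_per_year(availability):
    """Expected hours of outage per year at a given availability."""
    return (1.0 - availability) * HOURS_PER_YEAR


if __name__ == "__main__":
    single = 0.999  # hypothetical SLA figure for one provider
    dual = combined_availability([single, single])
    print(f"one provider:  {downtime_hours_per_year(single):.2f} hours/year")
    print(f"two providers: {downtime_hours_per_year(dual) * 3600:.1f} seconds/year")
```

Under those assumptions, one 99.9% provider is down for nearly nine hours a year, while two in parallel are both down for about half a minute. Real numbers will be worse than the idealized case, which is exactly why path diversity matters as much as provider count.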

Lesson 3: Deploy a standard technology. We don’t care if our MPLS providers use Juniper, Cisco or Extreme for layer 2 transport, because it doesn’t matter. We don’t deploy vendor-specific technology; we deploy standardized, interoperable technology. We all agree on what a GigE handoff with jumbo MTUs and single-mode long-reach lasers looks like. It’s the same everywhere. We can bring in a new ISP or backbone transport provider, run the new one in parallel to the old, seamlessly cut over to the new, and not even tell our customers.

What parallels can we draw as we move the cloud up the stack?

- My provider doesn’t prioritize my traffic (CPU, memory, disk I/O): Pay them for QoS. Priority bits (CPU cycles, I/Os) cost more than ‘best effort’ bits (CPU cycles, I/Os). They always have and always will.

- My provider doesn’t provide reliable transport (CPU, Memory, Operating Systems, App Servers, Databases): Get a Tier 1 network provider (cloud provider), or get two network providers (cloud providers) and run them in parallel.

- My provider might not have enough capacity: Contract for burst network (CPU, I/O) capacity. Contract and pay for the ability to determine which bits (apps) get dropped when oversubscribed. Monitor trends, anticipate growth and load, and add capacity proactively.

- My provider might go bankrupt or have catastrophic failure of some sort: You’ve got a plan for that, right? They call it a backup network provider (cloud host). And your app is platform and technology neutral so you can seamlessly move your app to the new provider, right?

- My provider might not have a secure network (Operating System, Database): Well, you’ll just have to encrypt your traffic (database) and harden your edge devices (applications) against the possibility that the provider isn’t secure.
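The "two providers in parallel" and "platform neutral" answers above can be sketched in code. This is a minimal illustration, not a recipe: `BlobStore`, `InMemoryStore` and `RedundantStore` are hypothetical names, and the in-memory class merely stands in for whatever real provider sits behind the handoff. The point is that the app codes against a standard interface, the same way we code our network against a GigE handoff rather than a vendor.

```python
from typing import Protocol


class BlobStore(Protocol):
    """The 'standard handoff': the only thing the app knows about a provider."""

    def put(self, key: str, data: bytes) -> None: ...
    def get(self, key: str) -> bytes: ...


class InMemoryStore:
    """Hypothetical stand-in for a real provider, with a switch to simulate an outage."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.healthy = True
        self._data: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        if not self.healthy:
            raise ConnectionError(f"{self.name} is unavailable")
        self._data[key] = data

    def get(self, key: str) -> bytes:
        if not self.healthy:
            raise ConnectionError(f"{self.name} is unavailable")
        return self._data[key]


class RedundantStore:
    """Runs several providers in parallel behind the same interface."""

    def __init__(self, providers: list[BlobStore]) -> None:
        self.providers = list(providers)

    def put(self, key: str, data: bytes) -> None:
        # Write to every reachable provider; fail only if none accepted it.
        written = 0
        for provider in self.providers:
            try:
                provider.put(key, data)
                written += 1
            except ConnectionError:
                continue
        if written == 0:
            raise ConnectionError("no provider accepted the write")

    def get(self, key: str) -> bytes:
        # Try providers in order and return the first successful read.
        for provider in self.providers:
            try:
                return provider.get(key)
            except (ConnectionError, KeyError):
                continue
        raise ConnectionError("no provider could serve the read")
```

Swapping a provider then means writing one new class that satisfies `BlobStore`, running it in parallel with the old one, and cutting over. A real deployment would also have to reconcile writes that one provider missed during an outage, which this sketch ignores.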

Instead of looking back at what we are already doing and learning from what we’ve already done, we are acting like this is something totally new. It isn’t totally new.

It’s just moved up the stack.

The real question: Can the new top of stack cloud providers match the security, availability and reliability of the old layer 1-2-3 providers?

[1] Techbuddha, Cloud Computing, the Good, The Bad, and the Cloudy, Williams

[2] Rational Survivability, Patching The Cloud?, Hoff