This post introduces the estimations. Part One covers non-redundant systems. Part Two covers simple redundant systems.

Assumptions:

I'll start out with the following assumptions:

(1) That you have a basic understanding of MTBF and MTTR.

(2) That your SLA allows for maintenance windows. Maintenance windows, if you are fortunate enough to still have them, allow 'free' outages, provided that the outage can be moved to the maintenance window.

(3) That you have the principles laid out in my availability post implemented, including:

- Tier 1 hardware with platform management software installed, configured and tested. (Increases MTBF, Decreases MTTR)

- Stable power, UPS and generator. (Increases MTBF)

- Staff are available during SLA hours, either officially working or available via some form of on-call. (Decreases MTTR)

- Structured System Management implemented. (Increases MTBF, Decreases MTTR)

- Service contracts that match application SLA's. (Decreases MTTR)

- You have well designed, reliable power and cooling. (Increases MTBF)

- You have remote management, lights out boards and remote consoles on all devices.

(5) That you understand where you have coupled dependencies. Examples:

- In a typical redundant router -> switch -> firewall stack, a failure of the switch will cause interfaces on the router and firewall to fail. Those interface failures will trigger an HA failover on both the HA router pair and the HA firewall pair.

- In a typical dual-powered rack, devices with single power supplies are configured in HA pairs and connected such that a power failure on one side of the rack leaves one half of each HA pair still running.

(6) That you have Tier 1 hardware from a major manufacturer with platform management software installed, configured and tested. You need the predictive failure messages that modern servers are capable of generating to show up on your SMS device. Predictive failure doesn't change the rate at which components fail, but it allows you to proactively schedule replacement of failing components during maintenance windows. This gives you 'freebie' failed component replacement. If you do not have Tier 1 hardware and do not have platform management software configured, your failure rate and your recovery time will both increase.

(7) That staff are available during SLA hours, either officially working or available via some kind of on-call.

The estimates will:

- Be conservative estimates, with plenty of room for error.

- Include operating system issues.

- Include hardware issues.

The major components I'll cover are:

- WAN links

- Routers, switches, firewalls and similar devices

- SAN fabrics

- SAN LUNs

- Servers

- Power and Cooling

Excluded from the estimates are:

- Databases, database issues, database performance problems.

- Web Applications, including performance problems, configuration problems.

- Once-in-a-lifetime events (like an Interstate bridge falling down 100 meters from your data center).

- The human factor.

A handy chart

A chart of failure and MTTR assumptions might be handy. I'll express failure and recovery in hours per year. The math is pretty simple: number of failures per year * hours to recover = hours failed per year. It can be converted to 'nines' later.

| Component | Failures per Year | Hours to Recover | Hours Failed per Year |
| --- | --- | --- | --- |
| WAN Link | .5 | 8 | 4 |
| Routers, Devices | .2 | 4 | .8 |
| SAN Fabric | .2 | 4 | .8 |
| SAN LUN | .1 | 12 | 1.2 |
| Server | .5 | 8 | 4 |
| Power/Cooling | 1 | 2 | 2 |
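The arithmetic behind the chart, and the later conversion to 'nines', can be sketched in a few lines of Python. This is only an illustration: the function names are made up for this example, and it assumes a 24x7 SLA of 8760 hours per year with no credit for maintenance windows.

```python
HOURS_PER_YEAR = 24 * 365  # 8760; assumes a 24x7 SLA and ignores leap years


def hours_failed_per_year(failures_per_year, hours_to_recover):
    """Failures per year * hours to recover = hours failed per year."""
    return failures_per_year * hours_to_recover


def availability_percent(hours_failed, sla_hours=HOURS_PER_YEAR):
    """Convert hours failed per year into an availability percentage."""
    return 100.0 * (1.0 - hours_failed / sla_hours)


# WAN link row from the chart: one failure every other year, 8 hours to recover.
wan = hours_failed_per_year(0.5, 8)
print(f"WAN link: {wan} hours/year, {availability_percent(wan):.3f}% available")
# prints: WAN link: 4.0 hours/year, 99.954% available
```

If your SLA only covers business hours, pass a smaller `sla_hours` value; the same outage hours then cost more 'nines'.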

WAN links - T1's, DS3's, etc. For all non-redundant WAN links I figure an average of 4 hours per year of WAN-related network outage. Over hundreds of circuit-years of experience, figuring in backhoes, storms, tornadoes, circuit errors, etc., I figure that one outage every other year with an 8 hour MTTR is a reasonable estimate. Actual data from a couple hundred circuit-years is better than the estimate (one outage every 4 years with a 5 hour time to repair).

Routers, switches, firewalls and similar devices, booted from flash, with no moving parts other than power supply fans, I figure at one failure every 5 years, with the MTTR dependent on service contracts and sparing. If you have a spare on hand, or you have good vendor service, your MTTR can be figured at 2 or 4 hours. If not, figure at least 8 hours. If your network-like devices have disk drives or are based on PC components, I figure their failure rate to be the same as servers (see below).

SAN fabric, properly implemented, I figure at one failure every 5 years as with network devices, but because most SAN fabrics are built redundant, the MTTR is essentially zero, so SAN fabric errors rarely affect availability. The emphasis though, is on 'properly implemented'.

SAN LUN's, properly implemented, I figure at one failure every 10 years. But SAN LUN or controller failure, even though it is rare, likely has a very long MTTR. Recovering from failed LUN's is non-trivial, so at least 8 hours MTTR must be figured, with the potential for more, up to 24 hours.

Servers, with structured system management applied and with redundant disks, I figure at about one 8 hour outage every two years, or about 4 hours per server per year. Servers that are ad hoc managed, or servers without lights out management or redundant power supplies, I figure at about one 8 hour outage per year.

Power and cooling is highly variable. We have one data center where power/cooling issues historically have caused about 8 hours of outage per year. We can't remember the last time we had a power issue in our other data center. I figure power/cooling issues on a per-site basis, with estimates of 2 hours per year on the low end, and 8 hours per year on the high end.

Your assumptions for failure frequency and recovery time might be different than mine.

Once you have a rough estimate of the availability of the various components in an application stack, you can apply them to your application.

First steps:

- Sketch out your application stack

- Identify each component

- Identify parallel versus serial dependencies

- Identify coupled dependencies


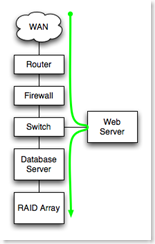

Serial Dependencies increase recovery time. In a chain of components in series, any failed component in the chain causes failure of the entire chain. In this case, the number of failures of the system is the sum of the number of failures of each series component. The expected hours of outage for each component are also added together. In the example to the right, all components from the WAN at the top to the RAID array at the bottom are serially dependent. Each must function for the application to function, and failure of a component is a failure of the application.
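As a rough sketch of the series rule, the snippet below sums failures and hours failed across a chain. The three-component chain and the function name are hypothetical, picked only for illustration; the per-component numbers are the chart estimates for a WAN link, a router and a server.

```python
# Hypothetical serial chain: name -> (failures per year, hours to recover).
chain = {
    "WAN link": (0.5, 8),
    "Router": (0.2, 4),
    "Server": (0.5, 8),
}


def series_estimate(components):
    """Return (failures per year, hours failed per year) for a serial chain."""
    failures = sum(rate for rate, _ in components.values())
    hours = sum(rate * recover for rate, recover in components.values())
    return failures, hours


failures, hours = series_estimate(chain)
print(f"{failures:.1f} failures/year, {hours:.1f} hours failed/year")
# prints: 1.2 failures/year, 8.8 hours failed/year
```

Every component added to the chain can only push both totals up, which is why long non-redundant stacks estimate out so poorly.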


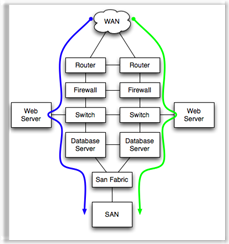

Parallel Dependencies improve recovery time. Components in parallel, properly configured for some form of automatic load balancing or failover, have an MTTR equal to the failover time for the pair of parallel components. Examples would be Microsoft Clustering Services, high availability firewall pairs, load balanced application servers, etc. In each of those cases, the high availability technology has a certain time window for detecting and disabling the failed component and starting the service on the non-failed parallel component. Parallel component failover and recovery times typically range from a few seconds to a few minutes. In the example to the left, if either the green or the blue path is available, the application will function.
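To see how much the failover time matters, here is a minimal sketch comparing a single server with a failover pair. It assumes the second member never fails while the first is being repaired, and the 120-second failover time and the function name are illustrative assumptions, not measurements.

```python
def ha_pair_hours_failed(failover_events_per_year, failover_seconds):
    """Hours failed per year when the MTTR is just the failover time."""
    return failover_events_per_year * failover_seconds / 3600.0


# Single server from the chart: .5 failures per year, 8 hours to recover.
single = 0.5 * 8

# Same failure rate, but recovery is an assumed 120-second failover.
pair = ha_pair_hours_failed(0.5, 120)

print(f"single: {single:.2f} hours/year, pair: {pair * 60:.1f} minutes/year")
```

The failure count does not go down; only the recovery time per failure does.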

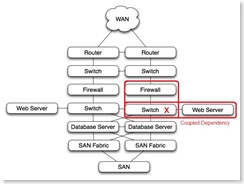


Coupled dependencies, as in the firewall -> switch -> web server stack shown, force the failure of dependent components when a single component fails. In this case, if the switch were to fail, the web server would also fail, and the firewall, if active, would also see a failed interface and force a failover to the other firewall. Coupled dependencies tend to be complex. Identification and testing of coupled dependencies ensures that the various failure modes of coupled components are well understood and predictable. Technologies like teamed NICs and EtherChannel can be used to de-couple dependencies.
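One way to keep track of coupled dependencies while sketching a stack is to write them down as a simple map and walk it. The map below is a hypothetical rendering of the firewall -> switch -> web server example, and 'affected' here means either failed or forced into a failover; the names are assumptions for illustration only.

```python
from collections import deque

# Hypothetical coupling map: when the key fails, the listed components
# are affected (they fail outright or are forced to fail over).
coupled = {
    "switch": ["web server", "firewall A"],
    "firewall A": [],
    "web server": [],
}


def affected_by(failed, coupling):
    """Return every component affected, directly or indirectly, by a failure."""
    seen = set()
    queue = deque([failed])
    while queue:
        node = queue.popleft()
        for dependent in coupling.get(node, []):
            if dependent != failed and dependent not in seen:
                seen.add(dependent)
                queue.append(dependent)
    return seen


print(sorted(affected_by("switch", coupled)))
# prints: ['firewall A', 'web server']
```

A map like this is only as good as the testing behind it; it records what you have observed, not what the vendor documentation promises.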

On to Part One - Non Redundant Systems