In the introductory post to this series, I outlined the basics of estimating the availability of simple systems. This post picks up where that one left off and looks at availability estimates for non-redundant systems.

Let's go back to the failure estimate chart from the introductory post:

| Component | Failures per Year | Hours to Recover | Hours Failed per Year |
|---|---|---|---|
| WAN Link | 0.5 | 8 | 4.0 |
| Routers, Devices | 0.2 | 4 | 0.8 |
| SAN Fabric | 0.2 | 4 | 0.8 |
| SAN LUN | 0.1 | 12 | 1.2 |
| Server | 0.5 | 8 | 4.0 |
| Power/Cooling | 1.0 | 2 | 2.0 |

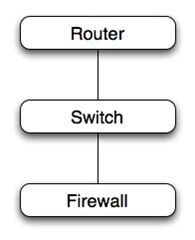

Now apply it to a simple stack of three non-redundant devices in series. Assuming that the devices are all 'boot from flash and no hard drive', we would use the estimated failures per year and hours to recover from the Routers/Devices row of the table for each device. For series dependencies, where the availability of the stack depends on every device in the stack, summing each device's expected hours failed per year (failures per year times hours to recover) gives us an estimate for the entire stack.

For each device:

.2 failures/year * 4 hours to recover = .8 hours/year unavailability.

For three devices in series, each with approximately the same failure rate and recovery time, the unavailability estimate would be the sum of the unavailability of each component, or .8 + .8 + .8 = 2.4 hours/year.
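The arithmetic above can be sketched in a few lines of Python. This is only an illustration: the function names are made up for this example, and the conversion to a percentage assumes an 8,760-hour year. Like the estimate in the text, it simply sums the expected downtime and ignores the small chance of overlapping failures.

```python
HOURS_PER_YEAR = 24 * 365  # 8760, ignoring leap years (an assumption)


def hours_failed_per_year(failures_per_year, hours_to_recover):
    """Expected unavailability of one component, in hours per year."""
    return failures_per_year * hours_to_recover


def series_unavailability(components):
    """Sum expected downtime over components that must all be up.

    `components` is a list of (failures_per_year, hours_to_recover) pairs.
    """
    return sum(hours_failed_per_year(f, r) for f, r in components)


def availability_percent(hours_down):
    """Convert hours of downtime per year into an availability percentage."""
    return 100.0 * (1.0 - hours_down / HOURS_PER_YEAR)


# Three identical devices from the Routers/Devices row:
# 0.2 failures/year, 4 hours to recover each.
stack = [(0.2, 4)] * 3
down = series_unavailability(stack)

print(f"{down:.1f} hours/year")               # 2.4 hours/year
print(f"{availability_percent(down):.3f}%")   # 99.973%
```

Adding a fourth device to `stack` raises the total to 3.2 hours/year, which is the whole point of the next paragraph.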

Notice a critical consideration. ***The more non-redundant devices you stack up in series, the lower your availability.*** (Notice I made that sentence in bold and italics. I did that 'cause it's a really important concept.)

The non-redundant series dependencies also apply to other interesting places in a technology stack. For example, if I want my really big server to go faster, I add more memory modules so that the memory bus can stripe the memory access across more modules and spend less time waiting for memory access. Those memory modules are effectively serial and non-redundant. So for a fast server, we'd rather have 16 x 1GB DIMMs than 8 x 2GB DIMMs or 4 x 4GB DIMMs. The server with 16 x 1GB DIMMs will likely go faster than the server with 4 x 4GB DIMMs, but, assuming each module fails at about the same rate regardless of size, it will be roughly 4 times as likely to have a memory failure.
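A quick sketch of the DIMM comparison follows. The per-module failure rate is a made-up placeholder, not a measured figure; the point is that the ratio between configurations does not depend on it, as long as every module is assumed to fail independently at the same rate.

```python
# Hypothetical per-module failure rate, for illustration only.
FAILURES_PER_MODULE_YEAR = 0.01


def expected_memory_failures(module_count, rate=FAILURES_PER_MODULE_YEAR):
    """Expected memory failures per year for non-redundant modules in series."""
    return module_count * rate


baseline = expected_memory_failures(4)  # the 4 x 4GB configuration

for count, size_gb in [(16, 1), (8, 2), (4, 4)]:
    expected = expected_memory_failures(count)
    ratio = expected / baseline
    print(f"{count:>2} x {size_gb}GB DIMMs: {ratio:.0f}x the failures of 4 x 4GB")
```

Changing `FAILURES_PER_MODULE_YEAR` changes the absolute numbers but not the 4x, 2x, 1x ratios.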

Let's look at a more interesting application stack, again a series of non-redundant components.

We'll assume that this is a T1 from a provider, a router, a firewall, a switch, an application/web server, a database server and an attached RAID array. The green path shows the dependencies for a successful user experience. The availability calculation is a simple sum of the products of failure frequency and recovery time for each component.

| Component | Failures per Year | Hours to Recover | Hours Failed per Year |
|---|---|---|---|
| WAN Link | 0.5 | 8 | 4.0 |
| Router | 0.2 | 4 | 0.8 |
| Firewall | 0.2 | 4 | 0.8 |
| Switch | 0.1 | 12 | 1.2 |
| Web Server | 0.5 | 8 | 4.0 |
| Database Server | 0.5 | 8 | 4.0 |
| RAID Array | 0.1 | 12 | 1.2 |
| Power/Cooling | 1.0 | 2 | 2.0 |
| Total | | | 18.0 |

The estimate for this simple example works out to about 18 hours of downtime per year, not including application issues such as performance or scalability problems.

- The estimate also doesn't consider the human factor.

- Because the numbers we put into the chart are really rough, with plenty of room for error, the final estimate is also just a guesstimate.

- The estimate is the average hours of outage over a number of years, not the number of hours of outage for each year. You could have considerable variation from year to year.
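To make the stack calculation easy to rerun with your own guesses, here is a minimal sketch that takes the failure frequencies and recovery times from the table and computes each component's share of the expected downtime. The names `STACK` and `downtime_by_component` are invented for this example.

```python
# (component, failures per year, hours to recover), copied from the table.
STACK = [
    ("WAN Link", 0.5, 8),
    ("Router", 0.2, 4),
    ("Firewall", 0.2, 4),
    ("Switch", 0.1, 12),
    ("Web Server", 0.5, 8),
    ("Database Server", 0.5, 8),
    ("RAID Array", 0.1, 12),
    ("Power/Cooling", 1.0, 2),
]


def downtime_by_component(stack):
    """Map each component to its expected hours failed per year."""
    return {name: failures * hours for name, failures, hours in stack}


per_component = downtime_by_component(STACK)
total_hours = sum(per_component.values())

# List the biggest contributors first.
ranked = sorted(per_component.items(), key=lambda item: item[1], reverse=True)
for name, hours in ranked:
    share = 100 * hours / total_hours
    print(f"{name:<16} {hours:5.1f} h/yr  {share:4.1f}% of total")
print(f"{'Total':<16} {total_hours:5.1f} h/yr")
```

Sorting by contribution shows where redundancy would buy the most: with these inputs, the WAN link and the two servers account for most of the expected downtime.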

Applying the Estimate to the Real World

To apply this to the real world and estimate an availability number for the entire application, you'd have to know more about the application, the organization, and the persons managing the systems.

For example, assume that the application is secure and well written, that there are no scalability issues, and that it has version control, test, dev and QA environments, and a rigorous change management process. That application might suffer few if any application-related outages in a typical year. Figure one bad deployment per year that causes 2 hours of downtime. On the other hand, assume that it is poorly designed, and that there is no source code control or structured deployment methodology, no test/QA/dev environments, and no change control. I've seen applications like that have a couple of hours a week of downtime.

And if you consider the human factor, that the humans in the loop (the keyboard-chair interface) will eventually mis-configure a device, reboot the wrong server, fail to complete a change within the change window, etc., then you need to pad this number to take the humans into consideration.
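To put the two application scenarios on the same hours-per-year scale as the hardware estimate, here is a rough sketch. It reads "a couple of hours a week" as 2 hours times 52 weeks, which is my assumption, and it looks at application downtime alone; these hours would be added on top of the infrastructure estimate and whatever padding you choose for human error.

```python
HOURS_PER_YEAR = 24 * 365  # 8760, ignoring leap years (an assumption)


def availability_percent(hours_down):
    """Convert hours of downtime per year into an availability percentage."""
    return 100.0 * (1.0 - hours_down / HOURS_PER_YEAR)


# Well-run application: one bad deployment per year, 2 hours of downtime.
well_run_hours = 1 * 2

# Poorly run application: "a couple of hours a week", read as 2 hours x 52 weeks.
poorly_run_hours = 2 * 52

for label, hours in [("well run", well_run_hours), ("poorly run", poorly_run_hours)]:
    print(f"{label:<10} {hours:>3} h/yr  {availability_percent(hours):.3f}% "
          "(application downtime only)")
```

Under these assumptions the well-run application costs about 2 hours a year (99.977% on its own), while the poorly run one costs about 104 hours a year (98.813%), far more than the hardware it runs on.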

On to Part Two (or back to the Introduction?)
