Is it a Platform or a Religion?

I’m annoyed enough to keep that link in my ‘ToBlog’ notebook for over a year. That’s annoyed, ‘eh?

Apparently the system failed and the blogger decided that all failed systems that happen to be running on Windows fail because they run on Windows.

ToBlog Dump – Time to Clean House

Ain’t gonna happen. Time to clean house. I’ll dump the most interesting ones into a few posts & cull the rest.

Thomas Limoncelli: Ten Software Vendor Do’s and Don’ts

Thomas covers non-GUI, scripted and unattended installation, administrative interfaces, API’s, config files, monitoring, data restoration, logging, vulnerability notification, disk management, and documentation. The comments cover more.

When the weather map looks like this….

There is nothing the governments can do to put the genie back into the bottle

The “rich and powerful” are rich and powerful precisely because they have access to information that the rest of us don’t have. And – once you give people the power to access the information:

There is nothing the governments can do to put the genie back into the bottle

Wikileaks related. A good read.

Log Reliability & Automotive Data Recorders

Toyota's official answer seems to be either "It depends" or "The data retrieved from the EDR is far from reliable", unless the data exonerates them, in which case "the EDR information obtained in those specific incidents is accurate".

There’s got to be a blog post somewhere in that.

The flaw has prompted the company to consider changes in its development process

Apparently we have very, very large corporations chock-full of highly paid analysts, architects, developers and QA staff believing that it is perfectly OK to store banking credentials in plain text on a mobile device a decade into the 21st century.

Something is broke.

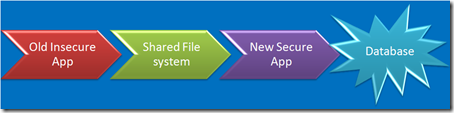

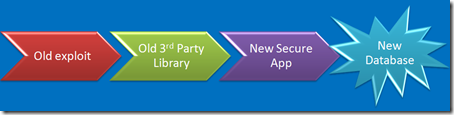

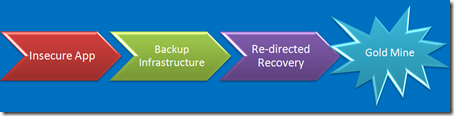

Application Security Challenges

You’ve got de-provisioning down pat, right? No old apps lying around waiting to be exploited? Nobody would ever use the wayback machine to find out where your app used to be, would they?

An associate of mine did the forensics on one like that. Yes, you can upload a Unix rootkit to a blob in a SQL server, execute it directly from the database process and target a nearby Unix server. Heck – you can even load a proxy on the SQL server, poison the Unix server’s ARP cache, and proxy all its traffic. No need to root it. Just proxy it. Network segmentation anyone?

Yech. I have no clue how someone who downloads [insert module here] from [insert web site here] and builds it into [insert app here] can possibly keep track of the vulnerabilities in [insert module here], update the right modules, track the dependencies, test for newly introduced bugs and keep the whole mess up to date.

Speaking of de-provisioning – Firewalls rules, load balancer rules… Are they routinely pulled as apps are shut down? Have you ever put a new app on the IP addresses of an old application?

That’s that whole single sign-on, single domain thing. It’s cool, but there are times and places where credentials should not be shared. Heresy to the SSO crowd, but valid nonetheless.

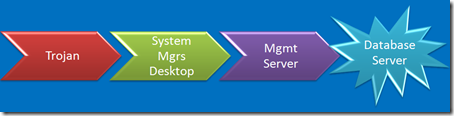

Of course you don’t manage your servers from your desktop, you’ve realized long ago that if it can surf the Internet, it can’t be secured, so you’ve got shiny new servers dedicated to managing your servers and apps. Now that you have them, how about making them a conduit that bridges the gap between insecure and secure?

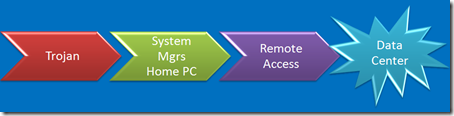

Do your system managers surf the Internet? Hang out at coffee shops? Do you trust their desktops?

Can’t figure out how to hack the really important [secure] systems? How about cloning a LUN and presenting it to the really unimportant [insecure] system? Your really cool storage vendor gives you really cool tools that make that really easy, right?

Capture credentials on the sysadmin’s home computer, try them against the corporate SSH gateway? Don’t worry about it. Nobody would ever think to try that. You’ve got two-factor, obviously.

When you are buried deep in your code, believing that your design is perfect, your code is checked, tested and declared perfect, and you think you've solved your security problems, stop & take a look at the security challenges surrounding your app.

I’m not sure what it is, but I’m sure it’s rootable

If you accidentally configure RDS in your Linux kernel, you’ve got something to fix. From what I can see, we can

I guess not.

DNS RPZ - I like the idea

Interesting.

Some thoughts:

I certainly don't think that offering the capability is a bad thing. Nobody is forced to use it.

Individual operators can decide what capability to enable and which blacklists to enable. ISP's could offer their customers resolvers with reputation filters and resolvers without. ISP's can offer blacklisted/greylisted resolvers for their 'family safe' offerings. Corporations/enterprises can decide for themselves what they blacklist.

A reputation-based whitelist would be interesting. Reputation could be determined by the registrar, perhaps based on the registrar having a valid, verified street address, phone and e-mail for the domain owner. A domain that has the above and has been registered for a month or so could be part of a whitelist. A domain that hasn't met the above could be gray listed. Operators could direct those to an internal 'caution' web page.
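The whitelist/gray list idea above can be sketched in a few lines. This is only an illustration of the rule as I described it, not anything a registrar or resolver actually implements: the `Registration` record, the `classify` function and the 30-day reading of "a month or so" are all assumptions.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Assumption: "a month or so" is read as 30 days.
MIN_AGE = timedelta(days=30)

@dataclass
class Registration:
    """Hypothetical registrar record for one domain."""
    domain: str
    registered_on: date
    verified_address: bool
    verified_phone: bool
    verified_email: bool

def classify(reg, today):
    """Whitelist a domain only if its contacts are verified and it is old enough."""
    verified = reg.verified_address and reg.verified_phone and reg.verified_email
    old_enough = today - reg.registered_on >= MIN_AGE
    return "whitelist" if verified and old_enough else "greylist"
```

An operator's resolver could then send anything classified as `greylist` to the internal 'caution' page.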

A downside:

Fast flux DNS based botnets are a significant issue, but I don't think that a blacklist of known-bad domains will solve the problem. If a malware domain is created as a part of a fast flux botnet, a blacklist will never be able to keep up. It could still be useful though. Some malware is hosted on static domains.

Optional:

A domain squatters blacklist. I'd love to be able to redirect address bar typos to an internal target rather than the confusing, misleading web pages that squatters use to misdirect users. I don't care if domain squatters' business model is disrupted. They are speculators. They should expect to have their business models disrupted once in a while.

Are we creating more vulnerabilities than we are fixing?

Sept 15th: Apple QuickTime flaws puts Windows users at risk

Sept 14th: Stuxnet attackers used 4 Windows zero-day exploits

Sept 13th: Adobe Flash Player zero-day under attack

Sept 10th: Primitive 'Here you have' e-mail worm spreading fast

Sept 9th: Patch Tuesday heads-up: 9 bulletins, 13 Windows vulnerabilities

Sept 9th: Security flaws haunt Cisco Wireless LAN Controller

Sept 9th: Apple patches FaceTime redirect security hole in iPhone

Sept 8th: New Adobe PDF zero-day under attack

Sept 8th: Mozilla patches DLL load hijacking vulnerability

Sept 8th: Apple plugs drive-by download flaws in Safari browser

Sept 2nd: Google Chrome celebrates 2nd birthday with security patches

Sept 2nd: Apple patches 13 iTunes security holes

Sept 1st: RealPlayer haunted by 'critical' security holes

Aug 24th: Critical security holes in Adobe Shockwave

Aug 24th: Apple patches 13 Mac OS X vulnerabilities

Aug 20th: Google pays $10,000 to fix 10 high-risk Chrome flaws

Aug 19th: Adobe ships critical PDF Reader patch

Aug 19th: HD Moore: Critical bug in 40 different Windows apps

Aug 13th: Critical Apple QuickTime flaw dings Windows OS

Aug 12th: Opera closes 'high severity' security hole

Aug 12th: Security flaws haunt NTLMv1-2 challenge-response protocol

Aug 10th: Microsoft drops record 14 bulletins in largest-ever Patch Tuesday

I'm thinking there's a problem here.

Of course Zero Day only covers widely used software and operating systems - the tip of the iceberg.

Looking at Secunia's list for today, 09/15/2010:

Linux Kernel Privilege Escalation Vulnerabilities

e-press ONE Insecure Library Loading Vulnerability

MP3 Workstation PLS Parsing Buffer Overflow Vulnerability

IBM Lotus Sametime Connect Webcontainer Unspecified Vulnerability

Python asyncore Module "accept()" Denial of Service Vulnerability

AXIGEN Mail Server Two Vulnerabilities

3Com OfficeConnect Gigabit VPN Firewall Unspecified Cross-Site Scripting

Fedora update for webkitgtk

XSE Shopping Cart "id" and "type" Cross-Site Scripting Vulnerabilities

Linux Kernel Memory Leak Weaknesses

Slackware update for sudo

Slackware update for samba

Fedora update for samba

Red Hat update for samba

Red Hat update for samba3x

Google Chrome Multiple Vulnerabilities

Serious question:

Are we creating new vulnerabilities faster than we are fixing old ones?

I'd really like to know.

In some ways this looks like the immature early periods of other revolutionary industries.

We built cars. The early ones were modern wonders that revolutionized transportation and a wide swath of society. After a few decades we figured out that they also were pollution spewing modern wonder death traps. Auto manufacturers sold their pollution spewing modern wonder death traps to customers who stood in line to buy them. Manufacturers claimed that there was nothing wrong with their products, that building clean autos with anything resembling safety was impossible, and that safe clean autos would cost so much that nobody could afford them. The customers were oblivious to the obvious. They piled their families into their death traps and drove them 85mph across South Dakota without seat belts (well - my dad did anyway - and he wasn't the fastest one out there, and I'm pretty sure I and my siblings weren't the only kids riding in the back of a station wagon with the tailgate window wide open...).

Some people described it as carnage. Others thought that autos were Unsafe at Any Speed.

Then came the safety & pollution lobbies. It took a few decades, a few hundred million in lobbyists, lawyers and lawsuits, and many more billions in R&D, but we now have autos that are fast, economical, safe and clean. A byproduct - completely unintended - was that autos became very low maintenance and very, very reliable. Maintenance windows went from hundreds of miles between shop visits to thousands of miles between shop visits (for oil changes) and tens of thousands of miles per shop visit (for everything but oil).

We need another Ralph Nader. I don't want to wait a couple decades for the software industry to get its act together.

I'll be too old to enjoy it.

Thoughts on Application Logging

- How to Do Application Logging Right by Anton Chuvakin and Gunnar Peterson

- Application Security Logging by Colin Watson

- and the Common Event Expression (CEE) Architecture Overview [PDF],

ZFS and NFSv4 ACL’s

Obviously I've been very unimpressed with Unix's trivial rwxr-x--- style permissions. Sun band-aided the decades old rwxr-x--- up with POSIX getfacl and setfacl. That was a start. We now have NFSv4 style ACL’s on ZFS. It looks like they are almost usable.

Engineering by Roomba’ing Around

- Start out systematically

- Hit an obstacle

- Change direction

- Hit another obstacle

- Change direction

- Eventually cover the problem space.

Bogus Drivers Licenses, Fake Passports

- Ran the algorithm on 11 million license photos

- Flagged 1 million for manual review

- Of the 100,000 reviewed so far, 1200 licenses were cancelled

Oracle Continues to Write Defective Software, Customers Continue to Buy it

What’s worse:

- Oracle continues to write and ship pathetically insecure software.

Or:

- Customers continue to pay for it.

From the July 2010 Oracle CPU pre release announcement:

| Oracle Product | Vulnerability | Rating | License Cost/Server |
| --- | --- | --- | --- |
| Database Server | Remote, No Auth[1] | 7.8/10 | $167,000[2] |

Awesome. For a mere $167,000[2] I get the privilege of installing poorly written, remotely exploitable, defective database software on a $5,000 2-socket Intel server.

Impressive, isn’t it.

I’m not sure what a ‘Times-Ten’ server is – but I’m glad we don’t have it installed. The good news is that it’s only half the price of an Enterprise Edition install. The bad news is that it is trivially exploitable (score of 10 on a scale of 1-10).

| Oracle Product | Vulnerability | Rating | License Cost/Server |
| --- | --- | --- | --- |
| Times-Ten Server | Remote, No Auth[1] | 10/10 | $83,000[3] |

From what I can see from the July 2010 pre-release announcement, their entire product catalog is probably defective. Fortunately I only need to be interested in the products that we have installed, that have an Oracle CVSS score of 6 or greater, and that are remotely exploitable (the really pathetic incompetence).

If I were to buy a Toyota for $20,000, and if anytime during the first three years the Toyota was determined to be a smoldering pile of defective sh!t, Toyota would notify me and offer to fix the defect at no cost to me other than the inconvenience of having to drive to their dealership and wait in their lobby for a couple hours while they replace the defective parts. If they didn’t offer to replace or repair the defects, various federal regulatory agencies in various countries would force them to ‘fess up to the defect and fix it at no cost to me. Oracle is doing a great job on notification. But unfortunately they are handing me the parts and telling me to crawl under the car and replace them myself.

An anecdote: I used to work in manufacturing as a machinist, making parts with tolerances as low as +/-.0005in (+/-.013mm). If the blueprint called for a diameter of 1.000” +/-.0005 and I machined the part to a diameter of 1.0006” or .9994”, the part was defective. In manufacturing, when engineers designed defective parts and/or machinists missed the tolerances and made defective parts, we called it ‘scrap’ when the part was un-fixable or ‘re-work’ if it could be repaired to meet tolerances. We wrote it up, calculated the cost of repair/re-machining and presented it to senior management. If we did it too often, we got fired. The ‘you are fired’ part happened often enough that my foreman, the plant manager and I had a system. The foreman invited the soon to be fired employee into the break room for coffee, the plant manager sat down with the employee, handed him a cup of coffee and delivered the bad news. Meanwhile I packed up the terminated employee’s tools, emptied their locker and set the whole mess out in the parking lot next to their car. The employee was escorted from the break room directly to their car.

Will that happen at Oracle? Probably not.

Another anecdote: Three decades ago I was working night shift in a small machine shop. The owner was a startup in a highly competitive market, barely making the payroll. If we made junk, his customers would not pay him, he’d fail to make payroll, his house of cards would collapse and 14 people would be out of work. One night I took a perfectly good stack of parts that each had hundreds of dollars of material and labor already invested in them and instead of machining them to specification, I spent the entire shift machining them wrong & turning them into un-repairable scrap.

- One shift’s worth of labor wasted ($10/hour at the time)

- One shift’s worth of CNC machining time wasted ($40/hour at the time)

- Hundreds of dollars per part of raw material and labor from prior machining operations wasted

- Thousands of dollars wasted total (the payroll for a handful of employees for that week.)

My boss could have (or should have) fired me. I decided to send him a message that hopefully would influence him. I turned in my timecard for the night (a 10 hour shift) with ‘0’ in the hours column.

Will that happen at Oracle? Probably not.

One more anecdote: Three decades ago, the factory that I worked at sold a large, multi-million dollar order of products to a foreign government. The products were sold as ‘NEMA-<mumble-something> Explosion Proof’. I’m not sure what the exact NEMA rating was. Back in the machine shop, we just called them ‘explosion proof’.

After the products were installed on the pipeline in Siberia[4], the factory sent the product out for independent testing & NEMA certification. The product failed. Doh!

Too late for the pipeline in Siberia though. The defective products were already installed. The factory (and us peons back in the machine shop) frantically figured out how to get the dang gear boxes to pass certification. The end result was that we figured out how to re-work the gear boxes in the field and get them to pass. If I remember correctly, the remedy was to re-drill 36 existing holes on each part 1/4” deeper, tap the holes with a special bottoming tap, and use longer, higher grade bolts. To remedy the defect, we sent field service techs to Siberia and had them fix the product in place.

The factory:

1. Sold the product as having certain security related properties (safe to use in explosive environments)

2. Failed to demonstrate that their product met their claims

3. Figured out how to re-manufacture the product to meet their claims

4. Independently certified that the claims were met

5. Upgraded the product in the field at no cost to the customer

Oracle certainly has met conditions #1, #2 and #3 above. Will they take actions #4 and #5?

Probably not.

[1]Remotely exploitable - no authentication required implies that any system that can connect to the Oracle listener can exploit the database with no credentials, no session, no login, etc. In Oracle’s words: “may be exploited over a network without the need for a username and password”.

[2]Per Core prices: Oracle EE $47,000, Partitioning $11,500, Advanced Security $11,500, Diag Pack $5000, Tuning Pack $5000, Patch Management $3500. 8 cores, core factor of 0.5, discount of 50% == $167,000. YMMV.

[3]All other prices calculated as list price * 8 cores * .5 core factor * 50% discount.

[4]I have no idea if the pipeline was the infamous pipeline that made the headlines in the early 1980’s or not, nor do I know if it is the one that is rumored to have been blown up by the CIA by letting the Soviets steal defective software. We made gearboxes that opened and closed valves, not the software that drove them. We were told by management that these were ‘on a pipeline in Siberia’.
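The arithmetic behind footnotes [2] and [3] can be checked with a short sketch. The per-core list prices, the 8 cores, the 0.5 core factor and the 50% discount all come from the footnotes; the names `PER_CORE_LIST` and `license_cost` are only mine, for illustration.

```python
# Per-core list prices from footnote [2], in USD.
PER_CORE_LIST = {
    "Oracle EE": 47_000,
    "Partitioning": 11_500,
    "Advanced Security": 11_500,
    "Diag Pack": 5_000,
    "Tuning Pack": 5_000,
    "Patch Management": 3_500,
}

def license_cost(per_core_list, cores=8, core_factor=0.5, discount=0.5):
    """List price * cores * core factor, less the discount (footnote [3])."""
    return per_core_list * cores * core_factor * (1 - discount)

per_core_total = sum(PER_CORE_LIST.values())  # 83,500 per core
server_cost = license_cost(per_core_total)    # 167,000 per server
print(f"${server_cost:,.0f}")
```

With those inputs the per-core total is $83,500 and the per-server figure comes out to the $167,000 shown in the table.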

Let’s Mix Critical Security Patches and Major Architecture Changes and see What Happens.

“Yes, this was an unusual release, and an experiment in shipping new features quicker than our major release cycle normally allows.”

On version 3.6.n, plugins shared process space. On 3.6.n+1, plugins do not.

The experiment appears to have suffered a setback.

Sun/Oracle Finally Announces ZFS Data Loss Bug

If you’ve got a Sun/Oracle support login, you can read that an "Abrupt System Reboot may Lead to ZFS Filesystem Data Integrity Issues" on all Solaris kernels up through April 2010.

“Data written to a Solaris ZFS filesystem and confirmed by fsync(3C) may be lost in the event of an abrupt system reboot.”

This announcement came too late for us though.

If I am a customer of an ‘enterprise’ vendor with millions of dollars of that vendor’s hardware/software and hundreds of thousands in annual maintenance costs, I expect that vendor will proactively alert me of storage related data loss bugs. I don’t think that’s too much to expect, as vendors with which I do far less business have done so for issues of far less consequence.

Sun failed.

Hopefully Oracle will change how incidents like this are managed.

Another Reason for Detailed Access Logs

This statement is interesting though:

“[company spokesperson] said it's unclear how many customers' information was viewed, but that letters were sent to 230,000 Californians out of an "abundance of caution.”

What’s an Important Update?

Windows update runs (good).

Windows update classifies some updates as important, and some updates as optional (good).

Windows update decides that a Silverlight update is important. It appears security related (good) but also adds features (maybe good, maybe bad).

Windows update decides that a security definition update is optional (bad).

How can a definition update for a signature based security product be optional? That’s annoying, ‘cause now I have to make sure to check optional updates just in case they’re important.

Where are your administrative interfaces, and how are they protected?

One of the many things that keeps me awake at night:

For each {application|platform|database|technology} where are the administrative interfaces located, and how are they protected?

I've run into administrative interface SNAFU's on both FOSS and purchased software. A common problem is applications that present an interface that allows access to application configuration and administration via the same ports and protocols as the application user interface. A good example is Twitter, where hacker-useful support tools were exposed to the Internet with ordinary authentication.

In the case of the Pennsylvania school spy cam caper, the 'administrative interface' that the school placed on the laptops apparently is relatively easy to exploit, and because they sent the students home with the district laptops, the interface is/was exploitable from the Internet.

Years ago one of our applications came with a vendor provided Tomcat install configured with the Tomcat management interface (/manager/*) open to the Internet on the same port as the application, ‘secured’ with a password of 'manager', without the quotes. Doh!

The most recent JMX console vulnerability shows us the type of administrative interface that should never, ever be exposed to the Internet. (A Google search shows that at least a few hundred JMX consoles are exposed to the Internet.)

I try to get a handle on ‘rogue’ administrative interfaces by white listing URL’s in the load balancers. I’ll ask the vendor for a list of top level URL’s and build regex rules for them. (/myapp/*, /anotherapp/*, etc.). When the list includes things like /manager/*, /admin/*, /config/* we open up a dialogue with the vendor.
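A minimal sketch of that whitelist idea, written in Python rather than in any particular load balancer's rule language. The `/myapp/`, `/anotherapp/`, `/manager/`, `/admin/` and `/config/` prefixes are the examples from the paragraph above; the vendor list and all function names are hypothetical.

```python
import re

# Hypothetical list of top-level URL prefixes as a vendor might supply it.
VENDOR_PREFIXES = ["/myapp/", "/anotherapp/", "/manager/"]

# Prefixes that suggest an administrative interface.
ADMIN_PREFIXES = {"/manager/", "/admin/", "/config/"}

def split_prefixes(prefixes):
    """Separate prefixes to allow from those that need a vendor conversation."""
    allowed = [p for p in prefixes if p not in ADMIN_PREFIXES]
    flagged = [p for p in prefixes if p in ADMIN_PREFIXES]
    return allowed, flagged

def build_whitelist(prefixes):
    """Compile one anchored regex that matches only the given prefixes."""
    if not prefixes:
        return re.compile(r"(?!)")  # an empty whitelist matches nothing
    alternation = "|".join(re.escape(p) for p in prefixes)
    return re.compile(rf"^(?:{alternation})")

allowed, flagged = split_prefixes(VENDOR_PREFIXES)
whitelist = build_whitelist(allowed)

def is_allowed(path):
    """True if the request path falls under a whitelisted prefix."""
    return whitelist.match(path) is not None
```

Anything that lands in `flagged` is what starts the dialogue with the vendor; everything not matched by the whitelist is simply never forwarded.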

Our standard RFP template asks the vendor for information on the location and security controls for all administrative and management interfaces to their application. We are obviously hoping that they are one step ahead of us and they've built interfaces that allow configuration & management of the product to be forced to a separate 'channel' of some sort (a regex-able URL listening on a separate port, etc.).

Some vendors 'get it'.

Some do not.

Usenet services have been made unnecessary…

Duke University, 2010

The end of an era.

Major ISP’s have been shedding their Usenet services for years, but when the originator of the service dumps it, the Internet really ought to mark a date on a global calendar somewhere.

IPv6 Tunnels & Solaris

Following Dan Anderson’s instructions, I set up an IPv6 tunnel and put my home network on IPv6. It was surprisingly easy. I have an OpenSolaris server acting as the tunnel end point and IPv6 router, with IPv6 tunneled to Hurricane Electric, and didn’t spend much more than an hour doing it.

Following Dan’s instructions, I:

- Signed up at Hurricane Electric’s tunnel broker service, requested a /64 & created a tunnel

- Configured my OpenSolaris server as a tunnel end point

- Configured Solaris’s IPv6 Neighbor Discovery Protocol (NDP) service & reloaded it

- Pointed my devices at HE’s DNS servers

- ‘Bounced’ the wireless adapters on my various notebooks, netbooks and Mac’s

I didn’t have to reboot anything – and better yet – when I did reboot the various devices, IPv6 still worked.

I’m not sure why I needed to use HE’s name servers, but things started working a lot better when I did, and their name servers seem to work as well as anyone’s.

I think I got lucky – my DLINK DIR-655 home router/access point routes protocol 41 just fine. No configuration necessary.

I don’t have a static IP, so when my ISP moves me around, I’ll have to log in to Tunnel Broker and tweak the tunnel end point. That shouldn’t be a big deal – my ISP only changes my IP address once a year or so.

The ‘ShowIP’ Firefox plugin was very useful. It makes it clear when I’m using V6 vs V4.

Security considerations? I’ll tack them on to the end of the project and address them after implementation.

Oracle/Sun ZFS Data Loss – Still Vulnerable

There are two distinct bugs, one fsync() related, the other sync() related. Update 8 may fix “6791160 zfs has problems after a panic”, but Bug ID 6880764 “fsync on zfs is broken if writes are greater than 32kb on a hard crash and no log attached” is apparently not resolved until 142900-09 released on 2010-04-20.

We do not retest System [..] every time a new version of Java is released.

This post’s title is a quote from Oracle technical support on a ticket we opened to get help running one of their products on a current, patched JRE.

Oracle’s response:

“1. Please do not upgrade Java if you do not have to

2. If you have to upgrade Java, please test this on your test server before implemeting [sic] on production

3. On test and on production, please make a full backup of your environment (files and database) before upgrading Java and make sure you can roll back if any issue occurs.”

In other words – you are on your own. The hundreds of thousands of dollars in licensing fees and maintenance that you pay us don’t do you sh!t for security.

Let’s pretend that we have a simple, clear and unambiguous standard: ‘There will be no unpatched Java runtime on any server’.

There isn’t a chance in hell that standard can be met.

This seems to be a cross vendor problem. IBM’s remote server management requires a JRE on the system that has the application that connects to the chassis and allows remote chassis administration. As far as we can tell, and as far as IBM’s support is telling us, there is no possibility of managing an IBM xSeries using a patched JRE.

“It is not recommended to upgrade or change the JRE version that's built inside Director. Doing so will create an unsupported configuration as Director has only been tested to work with its built-in version.”

We have JRE’s everywhere. Most of them are embedded in products. The vendors of the products rarely if ever provide security related updates for their embedded JRE’s. When there are JRE updates, we open up support calls with them and watch them dance around while they tell us that we need to leave them unpatched.

My expectations? If a vendor bundles or requires third party software such as a JRE, that vendor will treat a security vulnerability in the dependent third party software as though it were a vulnerability in their own software, and they will not make me open up support requests for something this obvious.

It’s the least they could do.

Bit by a Bug – Data loss Running Oracle on ZFS on Solaris 10, pre 142900-09 (was: pre Update 8)

We recently hit a major ZFS bug, causing the worst system outage of my 20 year IT career. The root cause:

Synchronous writes on ZFS file systems prior to Solaris 10 Update 8 are not properly committed to stable media prior to returning from the fsync() call, as required by POSIX and expected by Oracle archive log writing processes.

On pre MU8 + 142900-09[1] (was: pre Update 8), we believe that programs utilizing fsync() or O_DSYNC writes to disk are displaying buffered-write-like behavior rather than un-buffered synchronous write behavior. Additionally, when there is a disk/storage interruption on the zpool device and a subsequent system crash, we see a "rollback" of fsync() and O_DSYNC files. This should never occur, as writes with fsync() or O_DSYNC are supposed to be on stable media when the kernel call returns.

If there is a storage failure followed by a server crash[2], the file system is recovered to an inconsistent state. Either blocks of data that were supposedly synchronously written to disk are not, or the ZFS file system recovery process truncates or otherwise corrupts the blocks that were supposedly synchronously written. The affected files include Oracle archive logs.

We experienced the problem on an ERP database server when an OS crash caused the loss of an Oracle archive log, which in turn caused an unrecoverable Streams replication failure. We replicated the problem in a test lab using a v240 with the same FLAR, HBA’s, device drivers and a scrubbed copy of the Oracle database. After hundreds of tests and crashes over a period of weeks, we were able to re-create the problem with a 50 line ‘C’ program that performs synchronous writes in a manner similar to the synchronous writes that Oracle uses to ensure that archive logs are always consistent, as verified by dtrace.

The corruption/data loss is seen under the following circumstances:

- Run a program that synchronously writes to a file, OR run a program that asynchronously writes to a file with calls to fsync().

- Followed by any of:[2]

  - SAN LUN un-present

  - SAN zoning error

  - local spindle pull

- Then followed by:

  - system break or power outage or crash recovery

Post recovery, in about half of our test cases, blocks that were supposedly written by fsync are not on disk after reboot.
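For anyone who wants to try something similar, here is a rough Python sketch of the kind of writer and post-crash check described above. It is not the 50 line ‘C’ program we used. The record layout, the 64 KiB record size (picked only because it is larger than the 32kb mentioned in the bug title) and the function names are all assumptions.

```python
import os
import struct
import zlib

RECORD_SIZE = 64 * 1024  # assumption: larger than the 32kb in the bug title

def make_record(seq):
    """Build a fixed-size record: CRC32, sequence number, then filler bytes."""
    body = struct.pack(">Q", seq) + bytes([seq % 256]) * (RECORD_SIZE - 12)
    return struct.pack(">I", zlib.crc32(body)) + body

def write_records(path, count):
    """Append records, calling fsync() after each one; return the count written."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_APPEND, 0o600)
    try:
        for seq in range(count):
            data = make_record(seq)
            written = 0
            while written < len(data):  # os.write may write fewer bytes than asked
                written += os.write(fd, data[written:])
            os.fsync(fd)  # the record should be on stable media once this returns
    finally:
        os.close(fd)
    return count

def count_intact_records(path):
    """Count leading records whose checksum and sequence number are valid."""
    intact = 0
    with open(path, "rb") as f:
        while True:
            rec = f.read(RECORD_SIZE)
            if len(rec) < RECORD_SIZE:
                break
            (crc,) = struct.unpack(">I", rec[:4])
            (seq,) = struct.unpack(">Q", rec[4:12])
            if crc != zlib.crc32(rec[4:]) or seq != intact:
                break
            intact += 1
    return intact
```

The idea is to record the last acknowledged sequence number somewhere off the box (a console or another host), induce the storage failure and crash, and then compare that number with `count_intact_records()` after reboot. Any acknowledged record that is missing or damaged is the behavior described above.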

As far as I can tell, Sun has not issued any sort of alert on the data loss bugs. There are public references to this issue, but most of them are obscure and don’t clearly indicate the potential problem:

- “MySQL cluster redo log gets truncated after a uadmin 1 0 if stored on zfs” (Sunsolve login required)

- “zfs has problems after a panic”

- “ZFS dataset sometimes ends up with stale data after a panic”

- “kernel/zfs zfs has problems after a panic - part II” Which contains a description: "But sometimes after a panic you see that one of the datafile is completely broken, usually on a 128k boundary, sometimes it is stale data, sometimes it is complete rubbish (deadbeef/badcafe in it, their system has kmem_flags=0x1f), it seems if you have more load you get random rubbish."

And:

- “fsync on zfs is broken if writes are greater than 32kb on a hard crash and no log attached”

From the Sun kernel engineer that worked our case:

Date 08-MAR-2010

Task Notes : […] Since S10U8 encompasses 10+ PSARC features and 300+ CR fixes for ZFS, and the fixes might be inter-related, it's hard to pinpoint exactly which ones resolve customer's problem.

For what it’s worth, Sun support provided no useful assistance on this case. We dtrace’d Oracle log writes, replicated the problem using an Oracle database, and then – to prevent Sun from blaming Oracle or our storage vendor - replicated the data loss with a trivial ‘C’ program on local spindles.

Once again, if you are on Solaris 10 pre Update 8 + 142900-09[1] (was: pre Update 8) and you have an application (such as a database) that expects synchronous writes to still be on disk after a crash, you really need to run a kernel from Update 8 + 142900-09 dated 2010-04-22 or newer[1] (was: Update 8 or newer, Oct 2009).

[1] 2010-04-25: Based on new information and a reading of 142900-09 released Apr/20/2010, MU8 alone doesn’t fully resolve the known critical data loss bugs in ZFS.

The read is that there are two distinct bugs, one fsync() related, the other sync() related. Update 8 may fix “6791160 zfs has problems after a panic”, but

Bug ID 6880764 “fsync on zfs is broken if writes are greater than 32kb on a hard crash and no log attached”

is not resolved until 142900-09 on 2010-04-22.

Another bug that is a consideration for an out of order patch cycle and rapid move to 142900-09:

Bug ID 6867095: “User applications that are using Shared Memory extensively or large pages extensively may see data corruption or an unexpected failure or receive a SIGBUS signal and terminate.”

This sounds like an Oracle killer.

[2]Or apparently a server crash alone.

Phishing Attempt or Poor Customer Communications?

The e-mail, as received earlier today:

From: gcss-case@hpordercenter.com

Subject: PCC-Cust_advisory

Dear MIKE JAHNKE,

HP has identified a potential, yet extremely rare issue with HP BladeSystem c7000 Enclosure 2250W Hot-Plug Power Supplies manufactured prior to March 20, 2008. This issue is extremely rare; however, if it does occur, the power supply may fail and this may result in the unplanned shutdown of the enclosure, despite redundancy, and the enclosure may become inoperable.

HP strongly recommends performing this required action at the customer's earliest possible convenience. Neglecting to perform the required action could result in the potential for one or more of the failure symptoms listed in the advisory to occur. By disregarding this notification, the customer accepts the risk of incurring future power supply failures.

Thank you for taking our call today, as we discussed please find Hewlett Packard's Customer Advisory - Document ID: c01519680.

You will need to have a PDF viewer to view/print the attached document.

If you don't already have a PDF viewer, you can download a free version from Adobe Software, www.adobe.com

The interesting SMTP headers for the e-mail:

Received: from zoytoweb06 ([69.7.171.51]) by smtp1.orderz.com with Microsoft SMTPSVC(6.0.3790.3959); Fri, 16 Apr 2010 10:22:02 -0500

Return-Path: gcss-case@hpordercenter.com

Message-ID: 5A7CB4C6E58C4D9696B5F867030D280C@domain.zoyto.com

The interesting observations:

- They spelled my name wrong and used ‘Mike’ not ‘Michael’

- The source of the e-mail is not hp.com, nor is hp.com in any SMTP headers. The headers reference hpordercenter.com, Zoyto and orderz.com

- hpordercenter.com, Zoyto and orderz.com all have masked/private Whois information.

- The subject is “PCC-Cust_advisory”, with – and _ for word spacing

- Embedded in the e-mail is a link to an image from the Chinese language version of HP’s site: http://….hp-ww.com/country/cn/zh/img/….

- There is inconsistent paragraph spacing in the message body

- It references a “phone conversation from this morning” which didn’t occur. There was no phone call.

- It attempts to convey urgency (“customer accepts risk…”)

- It references an actual advisory, but the advisory is 18 months old and hasn’t been updated in 6 months.

- Our HP account manager hasn’t seen the e-mail and wasn’t sure if it was legit.
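The header observation above can be checked mechanically. A rough sketch, assuming the only goal is to list domains in From, Return-Path, Message-ID and Received that are not the expected vendor domain; the function names and the regular expressions are illustrative assumptions, and headers are trivially forgeable, so a clean result proves nothing.

```python
import re
from email import message_from_string

# Headers copied from the e-mail above.
SAMPLE_HEADERS = (
    "Received: from zoytoweb06 ([69.7.171.51]) by smtp1.orderz.com "
    "with Microsoft SMTPSVC(6.0.3790.3959); Fri, 16 Apr 2010 10:22:02 -0500\n"
    "Return-Path: gcss-case@hpordercenter.com\n"
    "Message-ID: 5A7CB4C6E58C4D9696B5F867030D280C@domain.zoyto.com\n"
    "From: gcss-case@hpordercenter.com\n"
    "Subject: PCC-Cust_advisory\n"
    "\n"
)


def header_domains(raw_headers):
    """Collect domains named in the address and relay headers."""
    msg = message_from_string(raw_headers)
    domains = set()
    for name in ("From", "Return-Path", "Message-ID"):
        for value in msg.get_all(name, []):
            match = re.search(r"@([A-Za-z0-9.-]+)", value)
            if match:
                domains.add(match.group(1).lower().rstrip("."))
    for value in msg.get_all("Received", []):
        # Only the receiving relay ("by host") is extracted in this sketch.
        match = re.search(r"\bby\s+([A-Za-z0-9.-]+)", value)
        if match:
            domains.add(match.group(1).lower().rstrip("."))
    return domains


def belongs_to(domain, expected):
    """True for the expected domain itself or any of its subdomains."""
    return domain == expected or domain.endswith("." + expected)


def unexpected_domains(raw_headers, expected):
    """Domains in the headers that are not under the expected domain."""
    return {d for d in header_domains(raw_headers) if not belongs_to(d, expected)}
```

Calling `unexpected_domains(SAMPLE_HEADERS, "hp.com")` on these headers flags all three domains. Note that `belongs_to` compares whole labels, so a look-alike such as hpordercenter.com does not pass as hp.com.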

The attached PDF (yes, I opened it…and no, I don’t know why…) has a URL across the top in a different font, as though it was generated from a web browser.

Did I get phished?

If so, there’s a fair chance that I’ve just been rooted, so I:

- Uploaded the PDF to Wepawet at the UCSB Computer Security Lab. It showed the PDF as benign.

- Checked firewall logs for any/all URL’s and TCP/UDP connections from my desktop at the time that I opened the PDF and again after a re-boot. There are no network connections that aren’t associated with known activity.

Damn – what a waste of a Friday afternoon.

3.5 Tbps

Interesting stats from Akamai:

- 12 million requests per second peak

- 500 billion requests per day

- 61,000 servers at 1000 service providers

The University hosts an Akamai cache. My organization uses the University as our upstream ISP, so we benefit from the cache.

The University’s Akamai cache also saw high utilization on Thursday and Friday of last week. Bandwidth from the cache to our combined networks nearly doubled, from about 1.2Gbps to just over 2Gbps.

The Akamai cache works something like this:

- Akamai places a rack of gear on the University network in University address space, attached to University routers.

- The Akamai rack contains cached content from Akamai customers. Akamai mangles DNS entries to point our users to the IP addresses of the Akamai servers at the University for Akamai cached content.

- Akamai cached content is then delivered to us via their cache servers rather than via our upstream ISP’s.
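The DNS step in the list above can be pictured with a toy resolver. This is only an illustration of the idea, not how Akamai's mapping actually works: the address ranges are documentation-only examples, and every name here is hypothetical.

```python
import ipaddress

# Hypothetical values using documentation-only address ranges (RFC 5737).
CAMPUS_NETWORKS = [ipaddress.ip_network("192.0.2.0/24")]
LOCAL_CACHE_IP = "192.0.2.10"     # stand-in for a cache server on the campus network
DISTANT_EDGE_IP = "203.0.113.10"  # stand-in for a server reached via an upstream ISP


def resolve_cached_content(client_ip):
    """Return the server address a client would be pointed at for cached content."""
    addr = ipaddress.ip_address(client_ip)
    # Clients inside the campus networks are steered to the local cache.
    if any(addr in net for net in CAMPUS_NETWORKS):
        return LOCAL_CACHE_IP
    # Everyone else is sent to some other server outside the campus.
    return DISTANT_EDGE_IP
```

A client at 192.0.2.55 gets the local cache address, so its traffic never crosses the upstream ISP link. A client at 198.51.100.7 gets the distant address.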

It works because:

- the content provider doesn’t pay the Tier 1 ISP’s for transport

- the University (and us) do not pay the Tier 1 ISP’s for transport

- the University (and us) get much faster response times from cached content. The Akamai cache is connected to our networks via a 10Gig link and is physically close to most of our users, so that whole propagation delay thing pretty much goes away

The net result is that something like 15-20% of our inbound Internet content is served up locally from the Akamai cache, tariff free. A win for everyone (except the Tier 1’s).

This is one of the really cool things that makes the Internet work.

Via CircleID

Update: The University says that the amount of traffic we pull from Akamai would cost us approximately $10,000 a month or more to get from an ISP. That’s pretty good for a rack of colo space and a 10G port on a router.

The Internet is Unpatched – It’s Not Hard to See Why

ATM Skimmers- a conversation

I normally use an ATM located in the reception area of the headquarters of the state police. It's just across the skyway from where I work, and most importantly, it's owned by a credit union that doesn't charge me for withdrawals. Free ATM's are a good thing.

A couple months ago, as I was about to slide my card, I looked up at the ceiling, scanned the nearby walls, grabbed the card reader and wiggled it up and down a bit, and bent down and looked at the bottom of the reader.

The receptionist looked at me: 'um - can I help you?'

Me: "I was just thinking about the probability of finding a card skimmer on an ATM in the lobby of the state police headquarters. Do you suppose it's possible?"

Her: (laugh) "I heard about those - how do they work?"

Me: [short explanation]

Her: "I don't know if I could tell the difference."

Me: "I'm not sure I can either..."

We both agreed that it was unlikely that someone would be able to skim this particular ATM. The receptionist is always behind the desk, facing the ATM; the lobby is only open during business hours; and because of heavy use by law enforcement officers, odds are a skimmer would be detected.

I like that ATM.

There's a new dance that's popular now, called the 'Skimmer Squint'. It's when the patron bends over & looks up at the bottom of the card reader, peers into the slot, steps back, glances left, right, up & down, grabs the reader and jiggles it...


O Broadband, Broadband, Wherefore Art Thou Broadband?

Payroll Processor Hacked, Bank Accounts Exposed

From the Minneapolis Star Tribune:

“A hacker attack at payroll processing firm Ceridian Corp. of Bloomington has potentially revealed the names, Social Security numbers, and, in some cases, the birth dates and bank accounts of 27,000 employees working at 1,900 companies nationwide”

A corporation gets hacked, ordinary citizens get screwed. It happens so often that it’s hardly news.

This is interesting to me because Ceridian is a local company and the local media picked up the story. That’s a good thing. I’m glad our local media is still able to hire professional journalists. The executives of a company that fails like that need to read about themselves in their local paper and watch themselves on the evening news. They might learn something. If we’re lucky, the hack might even get mentioned at the local country club and the execs might get a second glance from the other suits.

We aren’t that lucky.

In a follow up story, the Star Tribune interviewed a man who claims that he has not had a relationship with Ceridian for 10 years, yet Ceridian notified him that his data was also stolen. The Star Tribune reports that Ceridian told the victim that the compromise of 10 year inactive customer data was due to a ‘computer glitch’:

“a Ceridian software glitch kept it in the company's database long after it should have been deleted.”

Sorry to disappoint the local media, but computer glitches are not the reason that 10 year old data is exposed to hackers.

Brain dead management is the cause.

But even brain dead management occasionally shows signs of life. According to the customer whose 10 year old data was breached:

"The woman from Ceridian said they're working on removing my information from the database now,”

Gee thanks. What’s that horse-barn-door saying again?

Given corporate America’s aversion to ‘DELETE FROM…WHERE…’ queries, my identity and financial information is presumably vulnerable to exposure by any company that I’ve had a relationship with at any time since computers were invented.

That’s comforting.
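For the record, the ‘DELETE FROM…WHERE…’ in question is not exotic. A minimal sketch, assuming a hypothetical `customers` table with a `last_active` date stored as ISO text; the table, column names, and cutoff date are all made up for illustration, and a real retention policy would also have to deal with backups, replicas, and legal holds.

```python
import sqlite3
from datetime import date


def purge_inactive(conn, cutoff):
    """Delete customers whose last activity is older than the cutoff date."""
    cur = conn.execute(
        "DELETE FROM customers WHERE last_active < ?",
        (cutoff.isoformat(),),  # ISO dates compare correctly as text
    )
    conn.commit()
    return cur.rowcount


def demo():
    """Build a throwaway in-memory table and purge the stale record."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (name TEXT, last_active TEXT)")
    conn.executemany(
        "INSERT INTO customers VALUES (?, ?)",
        [("former", "2000-01-15"), ("current", "2010-01-15")],
    )
    removed = purge_inactive(conn, date(2003, 1, 1))
    remaining = [row[0] for row in conn.execute("SELECT name FROM customers ORDER BY name")]
    conn.close()
    return removed, remaining
```

Run on a schedule, something like this would keep a ten-year-inactive record from being there to steal in the first place.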

Home Energy – Greenyness (or cheapness)

For me, saving energy is nothing new. We grew up getting yelled at for leaving the fridge door open, we set our thermostat back before set-back thermostats were fashionable, and in winter we left the water in the bath tub until it had cooled so the heat from the tub water would warm the house instead of the drain (I ain’t paying to heat the $#*&$^ drain!). My dad super-insulated our house before the 1973 oil crisis. We weren’t saving energy to be green though, we were just cheap.
