The future:

Cameras will be ubiquitous. Storage will be effectively infinite. CPU processing power will be effectively infinite. Cameras will detect a broad range of the electromagnetic spectrum. The combination of cameras everywhere and infinite storage will inevitably result in all persons being under surveillance all the time. When combined with effectively infinite processing power and recognition software, it will be impossible for persons to move about society without being observed by their government.

All governments eventually become corrupt, and when corrupted they will misuse the surveillance data. There is no particular reason to think that this is specific to any political party, or to the left or the right. Although it is currently fashionable to think that the right is evil and the left is good, there is no reason to think this will be the case in the future. The only certainty is that the party in power will misuse the data to attempt to control its ‘enemies’, whoever it might perceive them to be at the time.

Surveillance advocates claim that cameras are simply an extension of law enforcement's eyes and therefore are not a significant new impingement on personal freedom.

I disagree.

Here’s how I’d build a surveillance system that uses technology to maximize law enforcement effectiveness, yet provides reasonable controls on the use of surveillance against the population as a whole.

- The cameras are directly connected to a control room of some sort. The control room is staffed by sworn, trained law enforcement officers, who watch the monitors.

- The locations of the cameras are well known.

- All cameras record to volatile memory only. The capacity of the volatile memory is small, on the order of one hour of footage. Unless specific action is taken, all recorded data more than one hour old is automatically and irretrievably lost: a ring buffer of some sort.

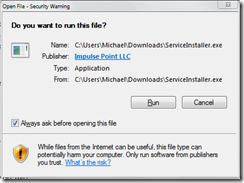

- If a sworn officer sees a crime, the sworn officer may switch specific cameras to non-volatile storage. The action to switch a camera from volatile to non-volatile storage is deliberate and is only taken when an officer sees specific events that constitute probable cause that a crime is being committed, or when a crime has been reported to law enforcement. Each instance of the use of non-volatile storage is recorded, documented and discoverable by the general public through some well-defined process.

- Once a camera is switched to non-volatile storage, it automatically reverts to volatile storage after a fixed time period (one hour, for example), unless the sworn officer toggles the non-volatile switch on the camera again before each period lapses.

- The non-volatile storage automatically expires after a fixed amount of time (24 hours, for example). If law enforcement believes that a crime has occurred and that the video will be evidence of the crime, law enforcement obtains a court order to retain the video evidence and move it to permanent storage. The court order must be for a specific crime and must name specific cameras and times.

- When a court so orders, the video is moved from non-volatile storage to whatever method law enforcement uses for retaining and managing evidence. If the court order is not obtained within the non-volatile expiration period, the video is irretrievably deleted. If the court order is obtained, the video becomes subject to whatever rules govern evidence in the legal jurisdiction of the cameras.
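
The retention rules in the list above are simple enough to sketch in code. What follows is a minimal, hypothetical Python model, not a real product or API: the `Camera` class, its method names, the one-frame-per-second rate and the window constants are all assumptions, chosen to mirror the one-hour, one-hour and 24-hour examples. It also assumes that when an officer toggles non-volatile mode, the buffered hour leading up to the toggle is kept, so a reported crime that has already happened is not lost. Times are plain seconds supplied by the caller, which keeps the behavior easy to check.

```python
from collections import deque

FRAMES_PER_SECOND = 1            # assumed: one frame per second keeps the sketch small
VOLATILE_WINDOW = 60 * 60        # assumed: about one hour of footage in volatile memory
NONVOLATILE_WINDOW = 60 * 60     # assumed: non-volatile mode lapses after one hour
RETENTION_WINDOW = 24 * 60 * 60  # assumed: held footage expires after 24 hours


class Camera:
    """Hypothetical model of one sealed camera; `now` is seconds supplied by the caller."""

    def __init__(self, camera_id, audit_log):
        self.camera_id = camera_id
        self.audit_log = audit_log  # shared, append-only, publicly discoverable log
        # Ring buffer: once full, each new frame silently evicts the oldest one.
        self.ring = deque(maxlen=VOLATILE_WINDOW * FRAMES_PER_SECOND)
        self.nonvolatile_until = 0  # non-volatile mode is off by default
        self.held = {}              # timestamp -> frame, in non-volatile storage

    def record(self, now, frame):
        self.ring.append((now, frame))
        if now < self.nonvolatile_until:
            self.held[now] = frame

    def toggle_nonvolatile(self, now, officer_id, reason):
        """Deliberate, logged action by a sworn officer; it lapses unless repeated."""
        self.audit_log.append((now, officer_id, self.camera_id, "non-volatile", reason))
        self.nonvolatile_until = now + NONVOLATILE_WINDOW
        # Assumption: the buffered hour leading up to the toggle is also kept.
        for ts, frame in self.ring:
            self.held[ts] = frame

    def expire(self, now):
        """Delete held footage captured more than the retention window ago."""
        cutoff = now - RETENTION_WINDOW
        self.held = {ts: f for ts, f in self.held.items() if ts > cutoff}

    def release_to_evidence(self, now, court_order_id, start, end):
        """Move footage named by a court order out of non-volatile storage."""
        self.expire(now)  # footage that has already expired cannot be rescued
        clip = sorted((ts, f) for ts, f in self.held.items() if start <= ts <= end)
        for ts, _ in clip:
            del self.held[ts]
        self.audit_log.append((now, court_order_id, self.camera_id, "evidence", (start, end)))
        return clip
```

For example, after 5,000 seconds of recording with no toggle, the ring holds only the most recent 3,600 frames and `held` is empty. Calling `toggle_nonvolatile` again before `nonvolatile_until` is reached simply pushes the deadline out another hour, which is the "repeated toggle" described above. Note that dropping references in Python does not securely erase memory; in a real system the "irretrievably lost" guarantee would have to come from the sealed hardware, not from application code.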

In the case of a 9/11 or 7/7 type of event, the officer would simply toggle all cameras to non-volatile mode and would continue to re-enable non-volatile mode every hour for as long as necessary (days, if need be). The action of toggling the cameras would again be recorded, documented and discoverable.

To prevent the system from being subverted by corrupt law enforcement (think J. Edgar Hoover and massive illegal surveillance), the systems would be physically sealed, and the software and the volatile and non-volatile storage would be inaccessible to law enforcement.

There would be some form of cryptographic hashing and signing that lets each recording be traced back to a specific camera and ensures that the recordings have not been altered by law enforcement.
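
One way to get that property is a hash chain, sketched here with Python's standard `hashlib` and `hmac` modules. Each frame's tag covers a digest of the camera ID, the timestamp, a SHA-256 digest of the frame and the previous frame's tag, so editing or reordering frames, or cutting frames out of the middle, breaks the chain. The function names, the all-zero starting tag and the idea of a key sealed inside each camera are assumptions for illustration only. HMAC is used just to keep the example within the standard library; since anyone who can verify an HMAC can also forge one, a real deployment would more likely use public-key signatures (Ed25519, for example), so courts and the public could check recordings without holding the camera's signing key.

```python
import hashlib
import hmac

GENESIS = bytes(32)  # assumed starting tag for each camera's chain


def frame_tag(key, camera_id, timestamp, frame, prev_tag):
    """HMAC-SHA256 over fixed-length fields, so the encoding is unambiguous."""
    message = (
        hashlib.sha256(camera_id.encode()).digest()
        + timestamp.to_bytes(8, "big")
        + hashlib.sha256(frame).digest()
        + prev_tag
    )
    return hmac.new(key, message, hashlib.sha256).digest()


def seal_recording(key, camera_id, frames, prev_tag=GENESIS):
    """frames: (timestamp, bytes) pairs. Returns (timestamp, frame, tag) triples."""
    sealed = []
    for ts, frame in frames:
        prev_tag = frame_tag(key, camera_id, ts, frame, prev_tag)
        sealed.append((ts, frame, prev_tag))
    return sealed


def verify_recording(key, camera_id, sealed, prev_tag=GENESIS):
    """Recompute the chain; an altered or reordered frame, or a gap, breaks it."""
    for ts, frame, stored_tag in sealed:
        prev_tag = frame_tag(key, camera_id, ts, frame, prev_tag)
        if not hmac.compare_digest(prev_tag, stored_tag):
            return False
    return True
```

A clip released as evidence can be checked on its own by passing the tag of the frame just before it as `prev_tag`. A chain by itself does not reveal frames dropped from the very end of a recording; catching that would need something extra, such as logging the latest tag somewhere law enforcement cannot edit.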

The key concepts are:

- the system defaults to destroying all recordings automatically.

- a sworn officer of the law must observe an event before triggering non-volatile storage.

- specific actions are required to store the recordings.

- those actions are logged, documented and discoverable.

- a court action of some sort is required for storage of any recording beyond a short period of time.

- the system would be tamper-proof. The act of law enforcement tampering with the systems to defeat the privacy controls would be a felony.

- the system would maintain the integrity of the recordings for as long as the video exists.

And most importantly, the software would be open source.